Navigating the Chasm between Reactive and Proactive Data Management

May 28, 2019 Kevin Martin

Earlier this year, it was estimated that more than 2.5 quintillion bytes of data are created each day, and that pace is only accelerating.

In fact, IBM estimated in 2016 that the annual cost of dirty data in the United States was $3.1 trillion. That's trillion. With a "t".

To put this figure into perspective (sort of), the average annual salary of a data analyst in the U.S. is just over $57,000. At $57K/year, a data analyst would have to work 52.6 million years to earn $3 trillion.

So, each year, we waste roughly 52.6 million years' worth of work on dirty data.
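The back-of-the-envelope arithmetic above can be reproduced in a few lines. This sketch only re-checks the article's own figures; note that using IBM's unrounded $3.1 trillion estimate instead of the rounded $3 trillion raises the result to roughly 54.4 million years.

```python
# Average U.S. data analyst salary, as quoted in this article.
ANALYST_SALARY_USD = 57_000

def analyst_years(cost_usd: float, salary_usd: float = ANALYST_SALARY_USD) -> float:
    """Years one analyst would need to work to earn cost_usd at salary_usd per year."""
    return cost_usd / salary_usd

# $3.0T is the rounded figure used in the text; $3.1T is IBM's estimate.
for label, cost in [("$3.0 trillion", 3.0e12), ("$3.1 trillion", 3.1e12)]:
    print(f"{label}: {analyst_years(cost) / 1e6:.1f} million analyst-years")
# $3.0 trillion: 52.6 million analyst-years
# $3.1 trillion: 54.4 million analyst-years
```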

What's more, last year the U.S. Food and Drug Administration issued 5,045 Form 483s for violations of the Food, Drug, & Cosmetic (FD&C) Act. At up to $250K a pop for the manufacturer, many of these 483s could have been avoided by eliminating bad or missing data through the introduction and support of an enterprise-wide data governance strategy.

In my experience navigating the pharmaceutical landscape, I've watched data grow at breakneck speed alongside the exponential expansion of industry innovation. With any aggressive growth comes growing pains. Data management is no different.

So, what is dirty data?

"Dirty" data is a simple concept but a wide umbrella. Often the result of undocumented, inconsistent, and un- or under-regulated data repository practices, dirty data includes but is not limited to:

- Incorrect entries

- Incomplete entries

- Outdated information

- Missing information

- Data stored somewhere outside a central database

- Unverified information
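To make these categories more concrete, here is a minimal Python sketch of automated checks that might flag some of them on a single record. Everything in it is a hypothetical assumption for illustration: the record layout and field names, the "central_db" system name, the 365-day staleness threshold, and the 0 to 100 valid range. Truly incorrect entries usually need domain-specific rules to detect, so the range check is only a stand-in.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

# Hypothetical record layout: field names are illustrative only.
@dataclass
class Record:
    record_id: str
    batch_number: Optional[str]
    result_value: Optional[float]
    recorded_on: Optional[date]
    verified_by: Optional[str]
    source_system: str

# Assumed policy parameters for this sketch.
CENTRAL_SYSTEM = "central_db"   # hypothetical name of the system of record
MAX_AGE = timedelta(days=365)   # older than this counts as "outdated"
VALID_RANGE = (0.0, 100.0)      # hypothetical specification range for result_value
REQUIRED_FIELDS = ("batch_number", "result_value", "recorded_on")

def dirty_data_flags(rec: Record, today: date) -> List[str]:
    """Return the dirty-data categories this record appears to fall into."""
    flags: List[str] = []
    # Incomplete or missing information: required fields left empty.
    missing = [f for f in REQUIRED_FIELDS if getattr(rec, f) is None]
    if missing:
        flags.append("incomplete: " + ", ".join(missing))
    # Possibly incorrect entry: value outside the (assumed) valid range.
    low, high = VALID_RANGE
    if rec.result_value is not None and not (low <= rec.result_value <= high):
        flags.append("possibly incorrect: result out of range")
    # Outdated information: recorded too long ago.
    if rec.recorded_on is not None and today - rec.recorded_on > MAX_AGE:
        flags.append("outdated")
    # Unverified information: no second-person verification recorded.
    if not rec.verified_by:
        flags.append("unverified")
    # Data stored somewhere outside the central database.
    if rec.source_system != CENTRAL_SYSTEM:
        flags.append("stored outside central database")
    return flags

rec = Record("R-001", "B123", 105.0, date(2018, 1, 15), None, "spreadsheet")
print(dirty_data_flags(rec, today=date(2019, 5, 28)))
```

A record that fails several checks at once, like the example, is exactly the kind of entry a governance program would route for review rather than silently correct.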

These data assets live throughout the data management lifecycle, from audit trails to metadata. Regulation, too, spans the entire infrastructure, including networks; all manufacturing equipment, lab instruments, and monitoring equipment; and processing systems.

In an industry such as ours, where one "minor" entry error could mean a matter of life and death, it's time for individuals to take responsibility for their everyday actions and for those actions' effect on the whole company's performance. Furthermore, now more than ever, it's each company's responsibility to create a culture in which it's possible to adopt enterprise-wide strategies for data governance.

Introducing the Data Maturity Model

The ALCOA framework has been in use in the regulated industry space since the 1990s. A vital component of Good Documentation Practice (GDP), this framework assesses data against the following qualities: Attributable, Legible, Contemporaneous, Original, and Accurate.

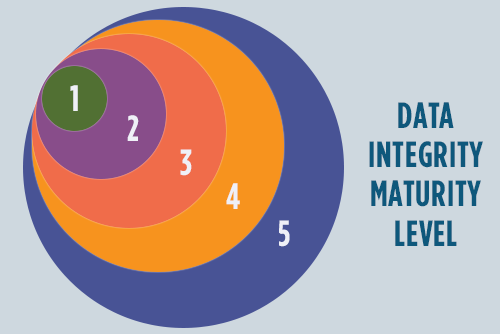

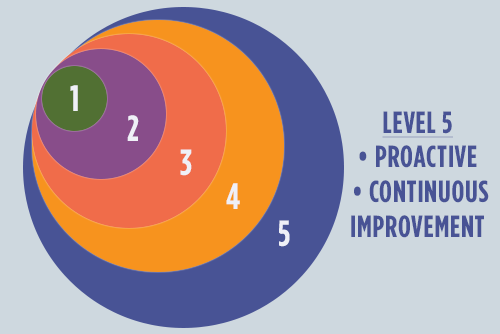

To complement the ALCOA model, experts have looked to the ISPE GAMP Data Integrity Maturity Model ("DI Maturity Model"), which was launched in 2017, as a roadmap for establishing data integrity within an organization. The maturity model is a simplified approach to comparing current and ideal states of data maturity.

By analyzing people, processes, and technology, regulated companies use the DI Maturity Model to assess their current state of compliance and data governance, identify the actions and improvements required to reach the next maturity level, and focus effort where processes are weakest. In doing so, they move from a reactive to a proactive data integrity practice, putting into place the work practices that lead to overall compliance.

The model may also serve management as a rapid yet detailed indicator for assigning resources and prioritizing work. The general approach is flexible and may be structured several ways, such as by geography, site, and/or department.

While the model is broken out into levels, it's important to recognize that DI maturity is a continuum rather than a set of discrete levels, and every company will have to tweak the model to align with its own policies and structures. Additionally, processes may span, or have characteristics of, multiple levels at the same time.
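As a rough illustration of focusing effort where processes are weakest, a self-assessment can be recorded per area (site or department) and per dimension (people, process, technology), then sorted by the gap to a target level. This hypothetical Python sketch is not part of the ISPE GAMP model itself: the 1 to 5 scale, the area names, and the scores are invented for illustration, and scores are floats to reflect that maturity is a continuum.

```python
from typing import Dict, List, Tuple

# area -> {dimension -> self-assessed maturity score on a 1-5 scale}.
# Scores are floats because DI maturity is a continuum, not discrete levels.
Assessment = Dict[str, Dict[str, float]]

def prioritize(assessment: Assessment, target: float) -> List[Tuple[str, str, float]]:
    """List (area, dimension, gap) for every score below target, largest gap first."""
    gaps = [
        (area, dimension, round(target - score, 2))
        for area, dimensions in assessment.items()
        for dimension, score in dimensions.items()
        if score < target
    ]
    # sorted() is stable, so ties keep the order in which areas were listed.
    return sorted(gaps, key=lambda gap: gap[2], reverse=True)

# Hypothetical scores for two departments.
scores: Assessment = {
    "QC Lab": {"people": 2.5, "process": 3.0, "technology": 2.0},
    "Packaging": {"people": 3.5, "process": 2.0, "technology": 3.0},
}
for area, dimension, gap in prioritize(scores, target=3.0):
    print(f"{area} / {dimension}: {gap} below target")
# QC Lab / technology: 1.0 below target
# Packaging / process: 1.0 below target
# QC Lab / people: 0.5 below target
```

Setting the target one step above an area's current level mirrors the model's focus on reaching the next maturity level rather than jumping straight to level five.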

Level One

In many cases, the catalyst for a data governance investigation is the discovery of non-compliant data or data practices, either internally or by an external party such as the FDA.

In this stage (let's call it the "hero" stage), the quality of data depends on the efforts of one individual or one team who is very good at what they do but works in isolation. As a result, there is no monitoring of data and no evidence to support their processes. While the processes may work within the silo, this approach is completely unsustainable across the organization.

At this stage in the game, it's up to a hero to kick-start the journey to data integrity, whether by force or voluntarily, and to enlist a few good partners for the uphill climb.

Because the corporate culture is often isolated and unwilling to report errors, it will require some finessing. It's up to the hero to create a risk-based business case for compliance and data integrity and to initiate the first steps toward course correction.

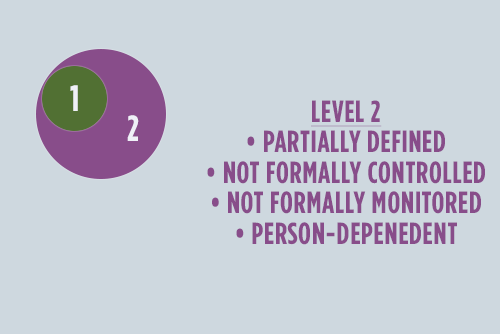

Level Two

Now we're getting somewhere. The hero has created a business case for DI, and select business procedures and documentation are in place. Within the small circle of early adopters, value is realized. News of this value will spread, leading to general awareness, but not necessarily adoption. While the approach is still completely person-dependent and unsustainable, recognition of value leads to transparency and the opportunity for improvement.

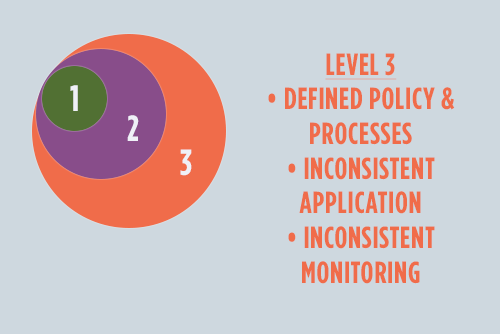

Level Three

So, here we are at level three, the make-or-break point of the DI Maturity Model. Let's take a look back and review how far the hero has come:

- They've discovered the issue.

- They've defined a business case and key business processes for improvement.

- They've engaged fellow ambassadors.

- They've illustrated value.

Kudos to the hero! Now is the time to navigate the chasm between reactive and proactive data management. The key? A complete suite of policies and processes, as well as the initiation of executive buy-in.

By now the principles of quality, compliance, and risk-based business objectives are reflected in working practices, and new policies and procedures encourage openness and transparency. However, with little executive buy-in, defined policies are applied and monitored inconsistently.

So, how to get from reactive to proactive? Practice, practice, practice.

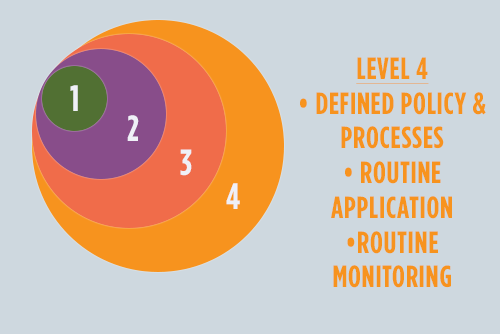

Level Four

The major difference between levels three and four is the consistent and persistent incorporation of DI maturity into daily practice. How? Management behavior changes to reflect these new ideals. Challenged by management to achieve a higher level of DI maturity, the organization will seek proactive ways to implement the processes and behaviors that lead to positive recognition.

Once the value of risk-based, defined policies has been demonstrated, staff are trained and an oversight committee is established to ensure that practices are put in place consistently, from person to person and department to department.

Bingo! Chasm traversed.

Level Five

The key to moving from level four to level five is the establishment and maintenance of a continuous feedback loop that allows the oversight group to recognize successful best practices, as well as looming issues, in order to proactively navigate a path forward.

Let's get real.

In theory, the DI Maturity Model is an ideal demonstration of how an enterprise can move from a state of disarray to proactive compliance. But how does this work in real life?

As discussed earlier, leadership and executive action are the main keys to climbing the DI maturity ladder. If your top-level management doesn't ensure that data governance is a number-one priority, then it's never going to be.

Case in point: In the mid-1990s, today's largest pharmaceutical company proclaimed that, moving forward, it would no longer tolerate FDA 483s. This announcement sparked a major initiative across the organization to assess all computer systems and processes for compliance issues.

What did they look for? Efficiency, quality, and sustainability. Although the looming threat of 483s was the catalyst for action, the ultimate goal was to put systemic quality systems into place throughout the organization. The result was not only a vast improvement in compliance, but also a culture that improved not out of fear of failure, but out of the desire to contribute to the company's quality culture.

So, where to start?

There are three ways to begin the journey toward level-five data governance.

The first is the hit-the-ground-running approach provoked when an organization is slammed with a 483. In this case, specialists are made aware of the issues and the first steps forward are fairly well mapped out.

Second, routine internal investigation can uncover opportunities for process improvement.

The third and ideal option is a proactive, risk-based approach to improvement. Here, an oversight team assesses data practices and processes to identify those with the highest risk and establish an order of priority, and then the work begins.

As a rule of thumb, the closer the product is to the patient, the higher the risk. Therefore, the highest risk areas for pharma/biotech are during the final stages of manufacturing and when a product is being released to a patient or consumer.
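An oversight team's risk ranking can start as simply as a weighted score per process. The Python sketch below is a hypothetical illustration of the rule of thumb above, not a validated risk method: the stage names, the 1 to 5 proximity weights, the finding counts, and the multiplicative scoring rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical proximity-to-patient weights: higher means closer to the patient.
PROXIMITY = {
    "research": 1,
    "development": 2,
    "early_manufacturing": 3,
    "final_manufacturing": 4,
    "release": 5,
}

@dataclass
class Process:
    name: str
    stage: str          # one of the PROXIMITY keys
    open_findings: int  # hypothetical count of open data-integrity findings

def risk_score(process: Process) -> int:
    # Assumed scoring rule: proximity weight scaled by (1 + open findings),
    # so a process with no findings still carries its stage's baseline risk.
    return PROXIMITY[process.stage] * (1 + process.open_findings)

def priority_order(processes: List[Process]) -> List[str]:
    """Names of processes, highest risk score first."""
    return [p.name for p in sorted(processes, key=risk_score, reverse=True)]

processes = [
    Process("Assay R&D notebooks", "research", 3),
    Process("Stability testing", "development", 2),
    Process("Batch record review", "final_manufacturing", 1),
    Process("Lot release", "release", 1),
]
print(priority_order(processes))
# ['Lot release', 'Batch record review', 'Stability testing', 'Assay R&D notebooks']
```

Multiplying rather than adding is one design choice among many: proximity to the patient always counts, while a pile of open findings can still push an upstream process up the queue.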

Starting from scratch, the entire process for one high-priority area could take anywhere from 12 to 18 months. However, because it is a constantly evolving, iterative process, the overhaul of company culture is ongoing.

Don’t know where to start? I do. With you.

Your business is in constant motion and growing with tremendous pressure to achieve more with less, while your technology investments struggle to keep up. You know what got you here, but you may not know what will get you “there.” Where to start? People? Process? Technology? Communication? Documentation?

Azzur's IT Advisory Services team have collectively logged thousands of hours listening to customers across the life sciences spectrum, working to gauge the situation, understand the core issues, illuminate the risks if problems go unsolved, and enable solutions that empower the business, and—most importantly—the people running it.

My team and I are happy to help you determine your next steps to reaching your Level 5. Reach out today!